I often encounter scenarios where query-tuning isn’t just about speed-it’s about understanding how SQL Server processes data and which patterns yield the best results. Recently, I explored how different query patterns for filtering dates affect performance. For my tests, I used SET STATISTICS IO ON and SET STATISTICS TIME ON.

Setup

I tasked myself with retrieving user activity from the StackOverflow database Users table, specifically for the year 2017, since the database I have only has data up through 2018. The table has millions of rows, so I thought this would be a perfect example. A quick note: there is a non-clustered index covering this query, which I’ve scripted below for you to check. LastAccessDate is a datetime column.

USE [StackOverflow]

GO

SET ANSI_PADDING ON

GO

CREATE NONCLUSTERED INDEX [IX_LastAccessDate_DisplayName_Reputation] ON [dbo].[Users]

(

[LastAccessDate] ASC,

[DisplayName] ASC,

[Reputation] ASC

)WITH (PAD_INDEX = OFF, STATISTICS_NORECOMPUTE = OFF, SORT_IN_TEMPDB = OFF, DROP_EXISTING = OFF, ONLINE = OFF, ALLOW_ROW_LOCKS = ON, ALLOW_PAGE_LOCKS = ON, FILLFACTOR = 100, OPTIMIZE_FOR_SEQUENTIAL_KEY = OFF) ON [PRIMARY]

GOHere are the six approaches I tested, along with my results.

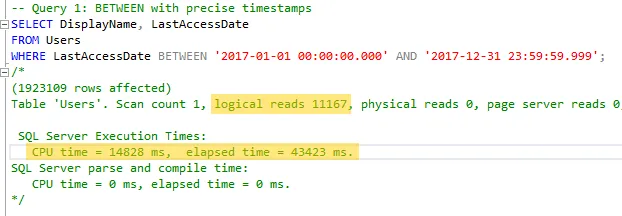

1. For this query, I used BETWEEN with precise timestamps. This is probably the most common method I see in the wild.

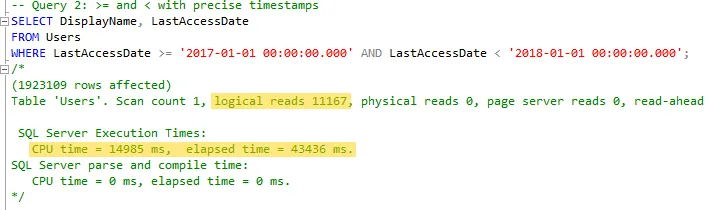

2. Next, I used >= and < again with precise timestamps. This method is also relatively common.

I’d argue there’s nothing unusual so far. Both queries are running fast enough for my needs, and I got the data I expected. Lets keep going.

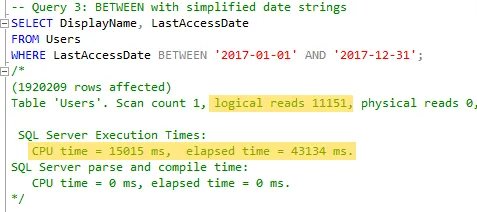

3. Next, I used BETWEEN again, but without the precise timestamps. I wanted to see if using less precise date format would make my query non-sargable.

We see no significant degradation to the performance of the query in terms of speed or reads, but if you look closely, I actually got a few thousand fewer rows than my other two tests. We’ll talk more about that later.

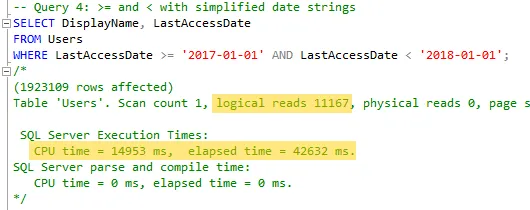

4. After that, I decided to use the same idea for >= and <. To which there was nothing significant to report.

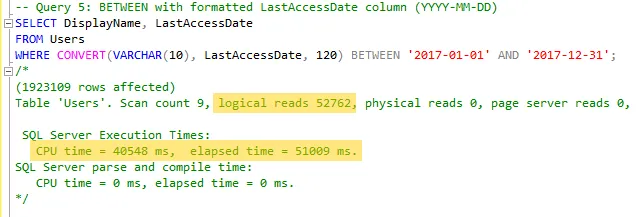

5. Next, I chose to use BETWEEN again, but convert the datetime value to a VARCHAR to manipulate the date format.

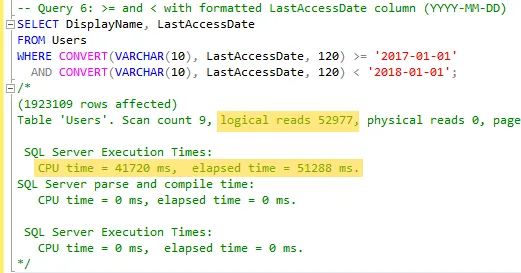

6. Lastly, doing this but with >= and < and converting the datetime value to a VARCHAR, as I did in step 5.

Final Thoughts

Clearly, logical reads skyrocket when using CONVERT or similar formatting functions. This happens because they render queries non-sargable and lead to table scans instead of index seeks. Additionally, simplified date ranges using >= and < outperform BETWEEN due to better clarity and consistency, particularly with time boundaries. Finally, subtle errors like the fewer rows returned in Query 3 emphasize the importance of precise filtering logic. Needless to say, I’ll be avoiding CONVERT and BETWEEN on my datetime columns from now on.

Since I’m writing this on December 31st, 2024, I want to wish everyone a happy New Year! Thank you for reading!